Inference for unknown mean and known variance

For a sampling model we wish to make inference on assuming is known. The first step will be to derive an expression for which is proportional to . To following prior structure will be adopted:

Now we can derive an expression for .

Now let’s just work with the by itself.

To get this final expression into a quadratic form we can let , , and . In proportionality the term will drop from the exponent. Now we have

Which is recognizable as the kernel of a .

Inference for unknown mean and unknown variance

It is more reasonable to expect that in an actual data analysis if we are trying to estimate the mean of a normal population then we likely need to estimate the variance as well. The following parameterization is used by Hoff in A First Course in Bayesian Statistical Methods.

The posterior joint distribution of after observing the data is given by the following product.

This first term is the conditional posterior distribution of . Since it is conditional on (i.e. is “known”) it can be derived with a calculation identical to the one given in the previous section. Generalizing to a sample of size instead of size 1 and substituting for we get that

where . Hoff’s parameterization lends itself to a nice interpretation of and the mean of prior observations and as the mean of prior observations and new observations all together. Also, the variance of is .

Now to get the posterior distribution of after observing we need to integrate over all possible values of according to

Eventually, we get that or alternatively , where

We get another nice interpretation here of as the prior sample size with corresponding sample variance . Also is the sample variance and is the sample sum of squares.

normR

# devtools::install_github("carter-allen/normR")

library(normR)Monte Carlo Sampling

Example from Hoff pg. 76.

# parameters for the normal prior

mu_0 <- 1.9

kappa_0 <- 1

# parameters for the gamma prior

sigsq_0 <- 0.010

nu_0 <- 1

# some data

ys <- c(1.64,1.70,1.72,1.74,1.82,1.82,1.82,1.90,2.08)

n <- length(ys)

y_bar <- mean(ys)

ssq <- var(ys)

# posterior parameters

kappa_n <- kappa_0 + n

nu_n <- nu_0 + n

mu_n <- mu_n(kappa_0,mu_0,n,y_bar)

sigsq_n <- sigsq_n(nu_0,sigsq_0,n,ssq,kappa_0,y_bar,mu_0)mu_n## [1] 1.814sigsq_n## [1] 0.015324# posterior variance draws

sigsq_posterior_draw <- draw_sigsq_posterior(10000,nu_n,sigsq_n)

# posterior mean draws

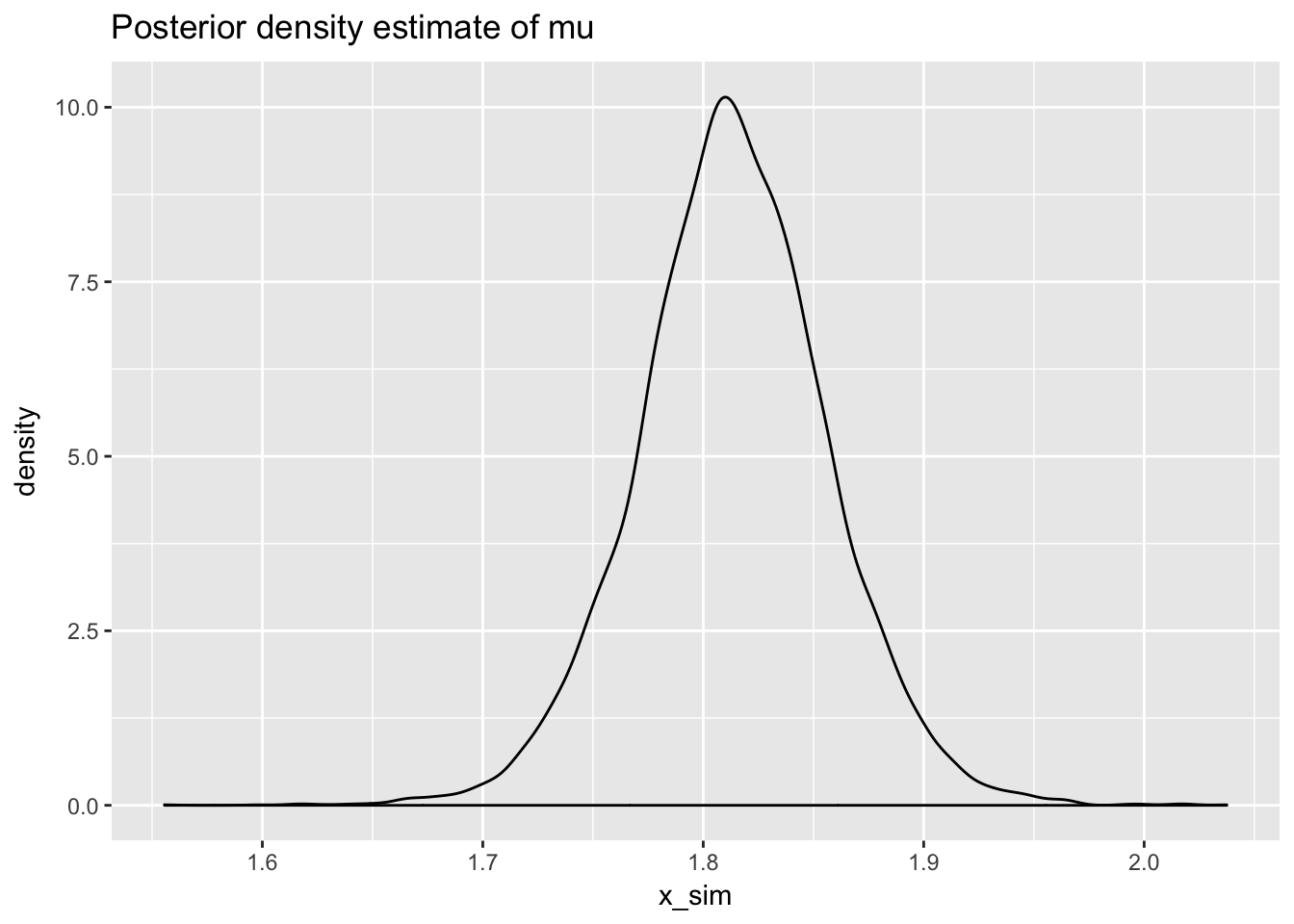

mu_posterior_draw <- draw_mu_posterior(mu_n,sigsq_posterior_draw,kappa_n)quantile(mu_posterior_draw,c(0.025,0.975))## 2.5% 97.5%

## 1.727323 1.899154# plot posterior draws

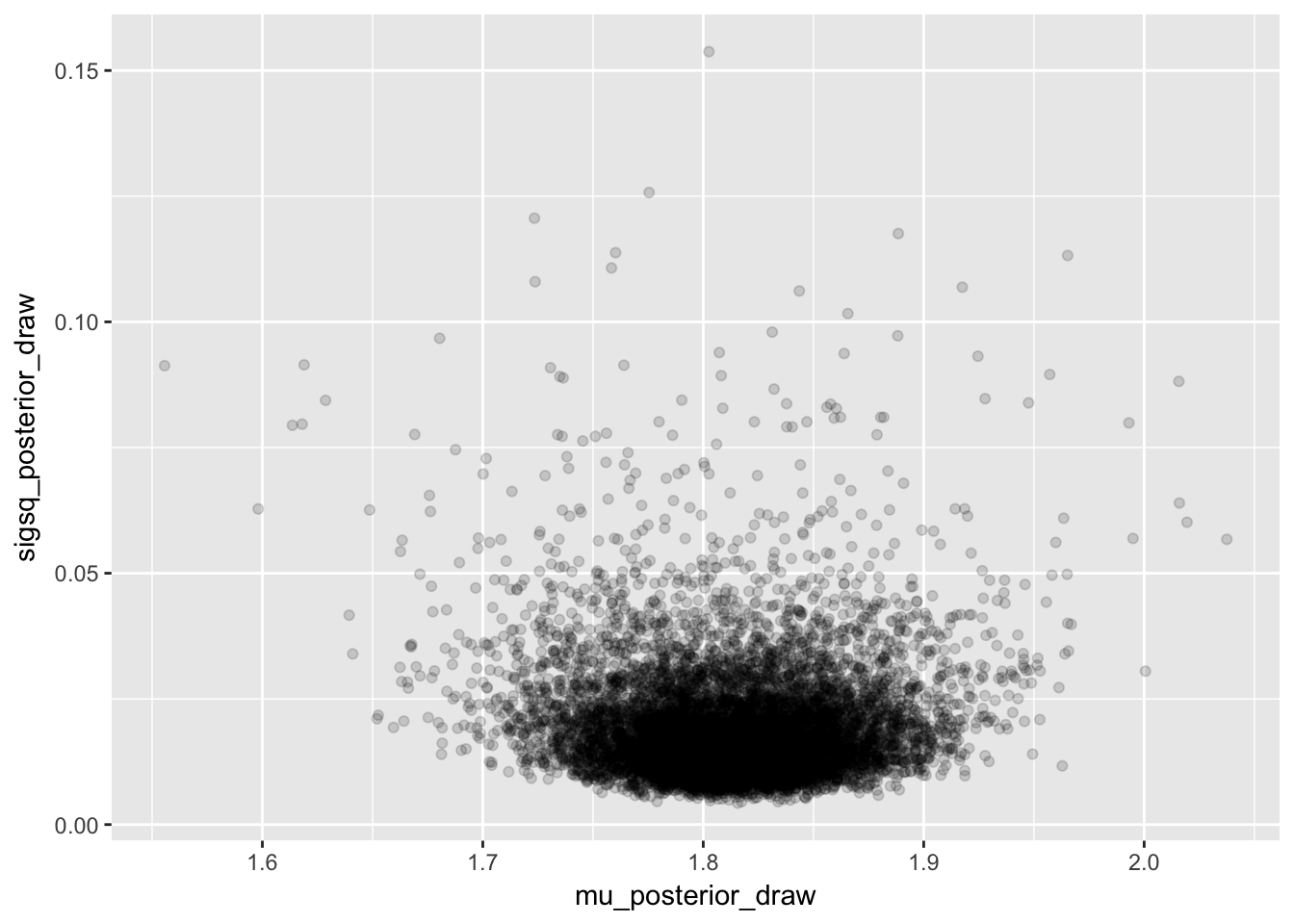

normR::plot_monte_sim(sigsq_posterior_draw,mu_posterior_draw)

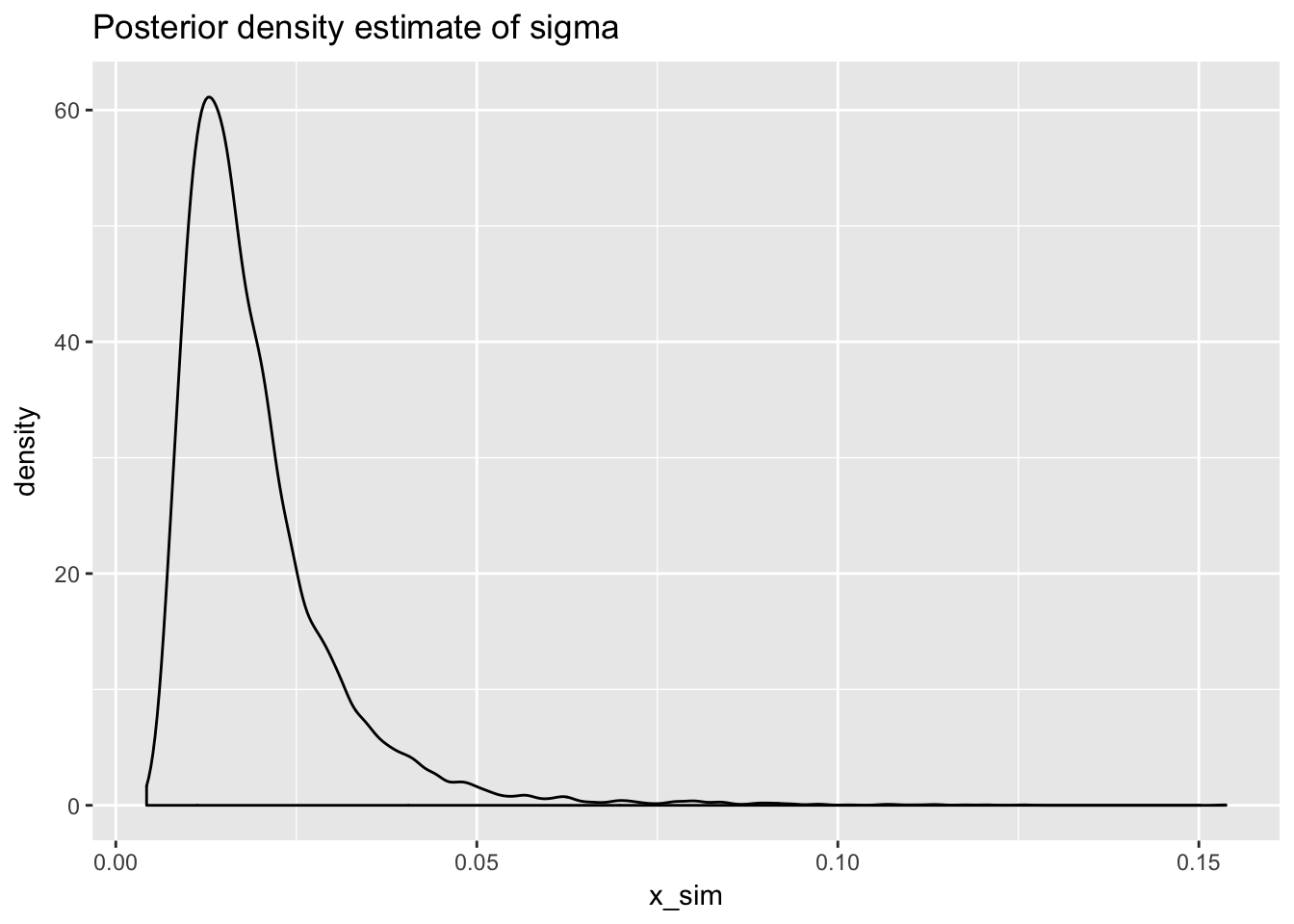

# plot posterior density estimate for sigma

normR::plot_posterior_density_estimate(sigsq_posterior_draw,

plot_title = "Posterior density estimate of sigma")

# posterior density estimate of mu

normR::plot_posterior_density_estimate(mu_posterior_draw,

plot_title = "Posterior density estimate of mu")