Bayesian Inference with Binary Data

The Beta-Binomial model is a classic Bayesian framework for working with dichotomous data. If we observe a Bernoulli process over a total of $n$ trials, where each trial has “success” probability $\theta$, then the total number of successes $Y$ in the $n$ trials is a binomial random variable, where $Y \mid \theta \sim \text{Binomial}(n, \theta)$.

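The binomial distribution of the number of successes $Y$ given $\theta$ is known as the likelihood of this model. Now, if there is uncertainty about the success probability $\theta$ in the binomial model, we can treat $\theta$ as an additional random variable that follows a beta distribution, where $\theta \sim \text{Beta}(\alpha, \beta)$.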

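The beta distribution, with parameters $\alpha$ and $\beta$, is the prior for this model. Given that we know $y$ successes occurred over $n$ trials of the Bernoulli process with success probability $\theta$, we can derive the posterior distribution for $\theta$, that is, the distribution of $\theta$ that incorporates both prior information and observed information, namely the quantity

$$p(\theta \mid y) \propto p(y \mid \theta)\, p(\theta) \propto \theta^{y}(1-\theta)^{n-y}\,\theta^{\alpha-1}(1-\theta)^{\beta-1} = \theta^{y+\alpha-1}(1-\theta)^{n-y+\beta-1}.$$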

This expression has the form of the kernel of another beta distribution, with parameters $y + \alpha$ and $n - y + \beta$, so $\theta \mid y \sim \text{Beta}(y + \alpha,\, n - y + \beta)$.
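The conjugate update can be checked numerically. The sketch below uses only base R (not betaR) and hypothetical values for the data and prior, chosen purely for illustration. It multiplies the binomial likelihood by the beta prior on a grid, normalizes, and compares the result with the closed-form beta posterior.

```r
# Hypothetical values, chosen only for illustration.
n <- 10; y <- 7          # data: 7 successes in 10 trials
a <- 2;  b <- 2          # Beta(a, b) prior

# Unnormalized posterior on a fine midpoint grid: likelihood times prior.
step  <- 0.001
theta <- seq(step / 2, 1 - step / 2, by = step)
unnorm <- dbinom(y, size = n, prob = theta) * dbeta(theta, a, b)

# Normalize with a Riemann sum so the grid density integrates to 1.
grid_post <- unnorm / sum(unnorm * step)

# Closed-form conjugate posterior: Beta(y + a, n - y + b).
exact_post <- dbeta(theta, y + a, n - y + b)

# Largest pointwise gap between the two densities; should be very small.
max_abs_diff <- max(abs(grid_post - exact_post))
```

If the two curves agree up to grid error, the posterior really is the beta distribution with the updated parameters.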

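To find the predictive distribution of $Y$, $p(y)$, we first need the joint distribution of $Y$ and $\theta$, $p(y, \theta) = p(y \mid \theta)\, p(\theta) = \binom{n}{y}\theta^{y}(1-\theta)^{n-y}\,\frac{\theta^{\alpha-1}(1-\theta)^{\beta-1}}{B(\alpha, \beta)}$, where $B(\cdot, \cdot)$ is the beta function.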

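The prior predictive distribution is $p(y) = \int_0^1 p(y, \theta)\, d\theta = \binom{n}{y}\frac{B(y + \alpha,\, n - y + \beta)}{B(\alpha, \beta)}$, where $B(\cdot, \cdot)$ is the beta function.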

The above expression is the probability mass function of a beta-binomial random variable with parameters $(n, \alpha, \beta)$. This is the distribution of the number of successes $Y$ averaged over all possible values of the success probability $\theta$.
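As a minimal sketch, the beta-binomial mass function can be written in a few lines of base R. The helper name `dbetabinom` is hypothetical and is not claimed to be part of betaR, and the parameter values are illustrative. The block also cross-checks one value by integrating the joint density over the success probability.

```r
# Beta-binomial pmf: choose(n, y) * B(y + a, n - y + b) / B(a, b).
# `dbetabinom` is a hypothetical helper name, not a betaR function.
dbetabinom <- function(y, n, a, b) {
  # Work on the log scale to avoid overflow for large n.
  exp(lchoose(n, y) + lbeta(y + a, n - y + b) - lbeta(a, b))
}

n <- 12; a <- 9; b <- 3            # illustrative values
pmf <- dbetabinom(0:n, n, a, b)
total <- sum(pmf)                  # should be 1 up to rounding

# Cross-check one value by integrating the joint density over theta.
y0 <- 9
by_integral <- integrate(
  function(theta) dbinom(y0, n, theta) * dbeta(theta, a, b),
  lower = 0, upper = 1
)$value
```

Agreement between `by_integral` and `dbetabinom(y0, n, a, b)` illustrates the "averaged over all possible values" interpretation.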

The last distribution of interest in this model is the posterior predictive distribution, or the predictive distribution of a new count of successes (call it $\tilde{y}$) given an observation $y$.

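Since $\tilde{y}$ and $y$ are conditionally independent given $\theta$, $p(\tilde{y} \mid y) = \int_0^1 p(\tilde{y} \mid \theta)\, p(\theta \mid y)\, d\theta$.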

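Following a similar derivation as that of the prior predictive, $\tilde{y} \mid y \sim \text{Beta-Binomial}(\tilde{n},\, y + \alpha,\, n - y + \beta)$, where $\tilde{n}$ is the number of new trials.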
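For readers who want the intermediate steps, the posterior predictive can be worked out as follows. This is a sketch that assumes the $\text{Beta}(y + \alpha,\, n - y + \beta)$ posterior and writes $\tilde{n}$ for the number of new trials and $B(\cdot, \cdot)$ for the beta function.

```latex
\begin{align*}
p(\tilde{y} \mid y)
  &= \int_0^1 p(\tilde{y} \mid \theta)\, p(\theta \mid y)\, d\theta \\
  &= \int_0^1 \binom{\tilde{n}}{\tilde{y}} \theta^{\tilde{y}} (1-\theta)^{\tilde{n}-\tilde{y}}
     \cdot \frac{\theta^{y+\alpha-1} (1-\theta)^{n-y+\beta-1}}{B(y+\alpha,\, n-y+\beta)}\, d\theta \\
  &= \binom{\tilde{n}}{\tilde{y}}
     \frac{B(\tilde{y}+y+\alpha,\; \tilde{n}-\tilde{y}+n-y+\beta)}{B(y+\alpha,\, n-y+\beta)}
\end{align*}
```

The last line is the beta-binomial mass function with the prior parameters replaced by the posterior ones.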

Useful R Functions

The betaR package includes some useful functions for working with the beta-binomial model. The package can be found at https://github.com/carter-allen/betaR and installed using the following command.

devtools::install_github("carter-allen/betaR")

Example

library(betaR)

Consider the situation in which we are modeling a team’s number of wins over a 12-game season. The probability that the team wins any given game is probably not a fixed value, with variability coming from the quality of the opposing team, home-court advantage, and countless other factors. If the team is relatively good, though, we might expect their probability of winning any given game to be around $0.75$, but not fixed at $0.75$.

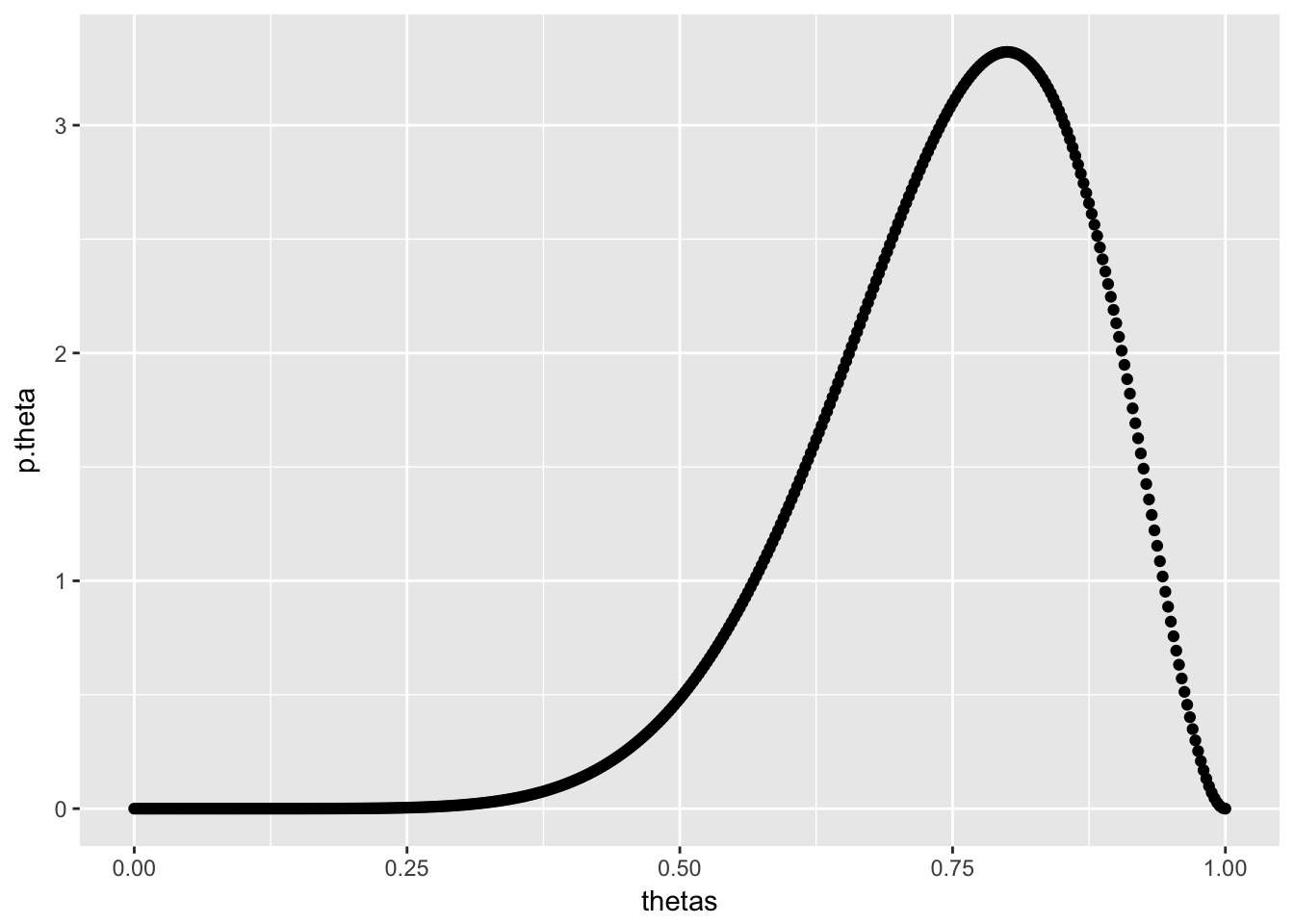

We can represent this prior belief by a beta distribution for the win probability $\theta$ with parameters $\alpha = 9$ and $\beta = 3$. These parameters can be thought of as the number of prior wins and prior losses, respectively, and would be a reasonable assumption if the team went 9-3 last season. The prior distribution of $\theta$ is shown below.

dbeta_plot(alpha = 9, beta = 3)

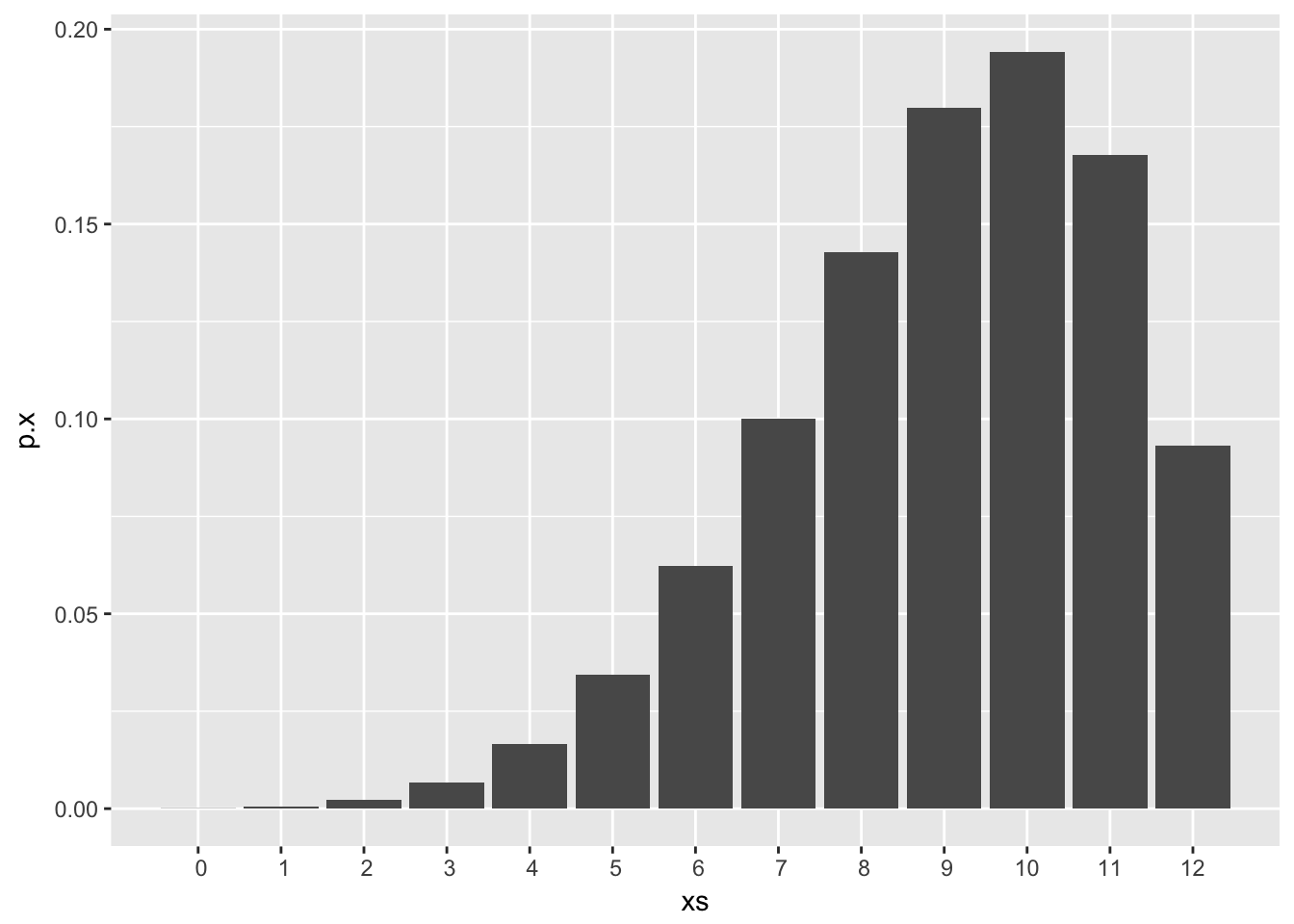
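A few numerical summaries can complement the plot of the prior. The sketch below uses only base R functions (`qbeta`, `pbeta`) rather than betaR, and the choice of a central 95% interval is an assumption made for illustration.

```r
a <- 9; b <- 3                               # prior "wins" and "losses"

prior_mean <- a / (a + b)                    # 9 / 12 = 0.75
prior_sd   <- sqrt(a * b / ((a + b)^2 * (a + b + 1)))

# Central 95% prior interval for the win probability.
prior_ci <- qbeta(c(0.025, 0.975), a, b)

# Prior probability that the team wins more often than it loses (about 0.967).
p_above_half <- pbeta(0.5, a, b, lower.tail = FALSE)
```

The interval is fairly wide, which reflects how little information a single 12-game season carries.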

Now if we wanted to make a prediction about how the team will do this season, we can form a prior predictive distribution of $Y$, that is, the number of games we expect the team to win this season, given their 9-3 record last season. If we model each game by a $\text{Bernoulli}(\theta)$ distribution and keep our $\text{Beta}(9, 3)$ prior for $\theta$, the prior predictive distribution of $Y$ is a $\text{Beta-Binomial}(12, 9, 3)$. The prior predictive distribution of $Y$ is shown below. Note the similarity in shape to the prior.

dbb_plot(n = 12, alpha = 9, beta = 3)

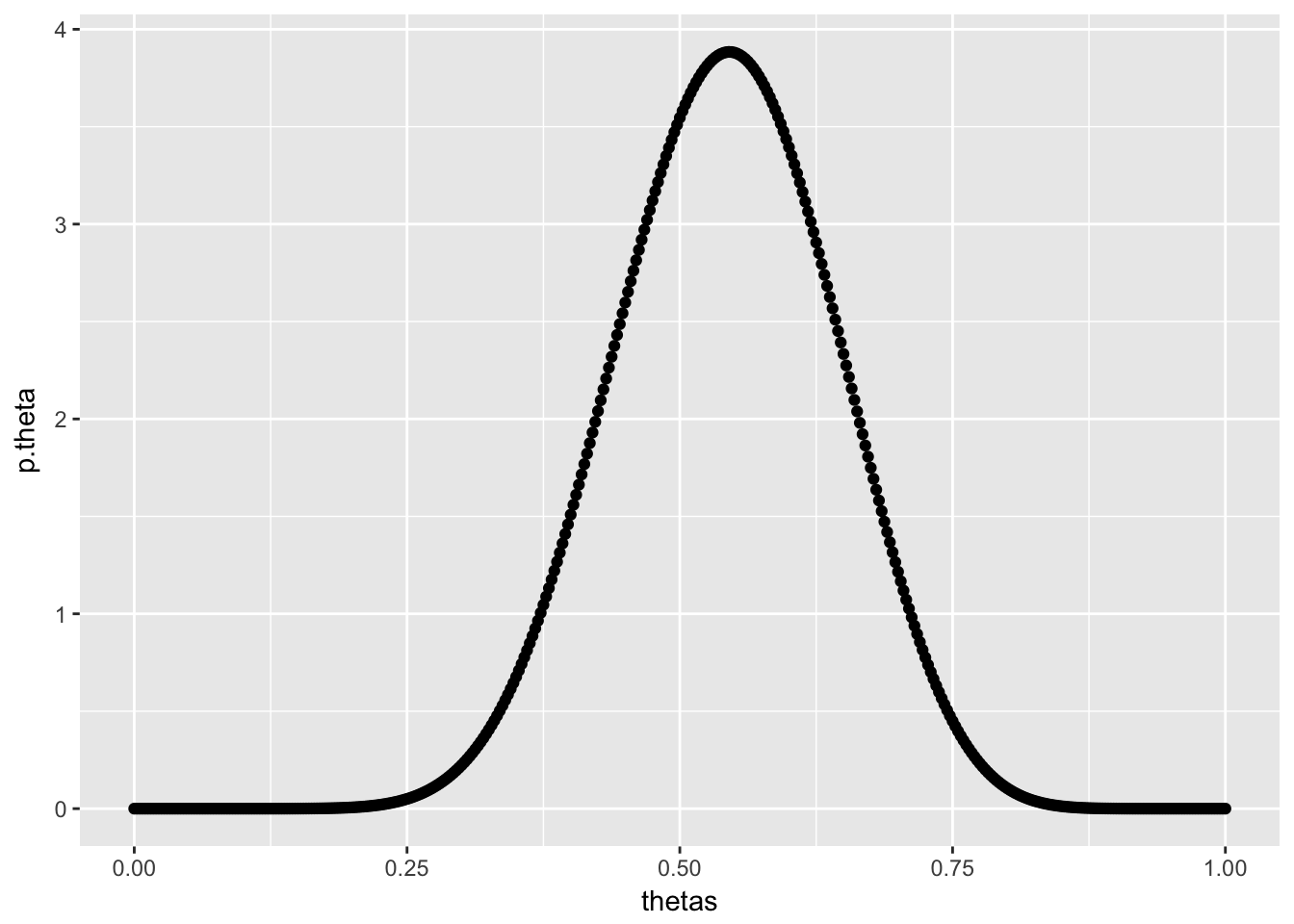
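The "averaging over the win probability" idea can also be sketched by simulation in base R: draw a win probability from the prior, then draw a season of 12 games with that probability. The seed and number of simulations are arbitrary choices made for this illustration.

```r
set.seed(1)                          # arbitrary seed, for reproducibility
n_games <- 12; a <- 9; b <- 3
n_sims  <- 100000

# One win probability per simulated season, drawn from the Beta(9, 3) prior.
theta_draws <- rbeta(n_sims, a, b)

# Number of wins in each simulated 12-game season.
win_draws <- rbinom(n_sims, size = n_games, prob = theta_draws)

# Simulated mean; the beta-binomial mean is n * a / (a + b) = 9.
sim_mean <- mean(win_draws)

# Empirical prior predictive probabilities for 0, 1, ..., 12 wins.
sim_pmf <- table(factor(win_draws, levels = 0:n_games)) / n_sims
```

The empirical frequencies in `sim_pmf` should approximate the beta-binomial probabilities shown in the plot.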

Let’s say that the season we are predicting ends up being much less successful than we expected, with the team going 4-8 over 12 games. Given this information, we can update our knowledge about $\theta$, the probability that this team wins any given game. The posterior is $\theta \mid y = 4 \sim \text{Beta}(4 + 9,\, 12 - 4 + 3) = \text{Beta}(13, 11)$, which has the following distribution.

dbeta_plot(alpha = 4+9,beta = 12-4+3)

Our belief about the probability this team wins any given game has become a bit less optimistic given their disappointing season.

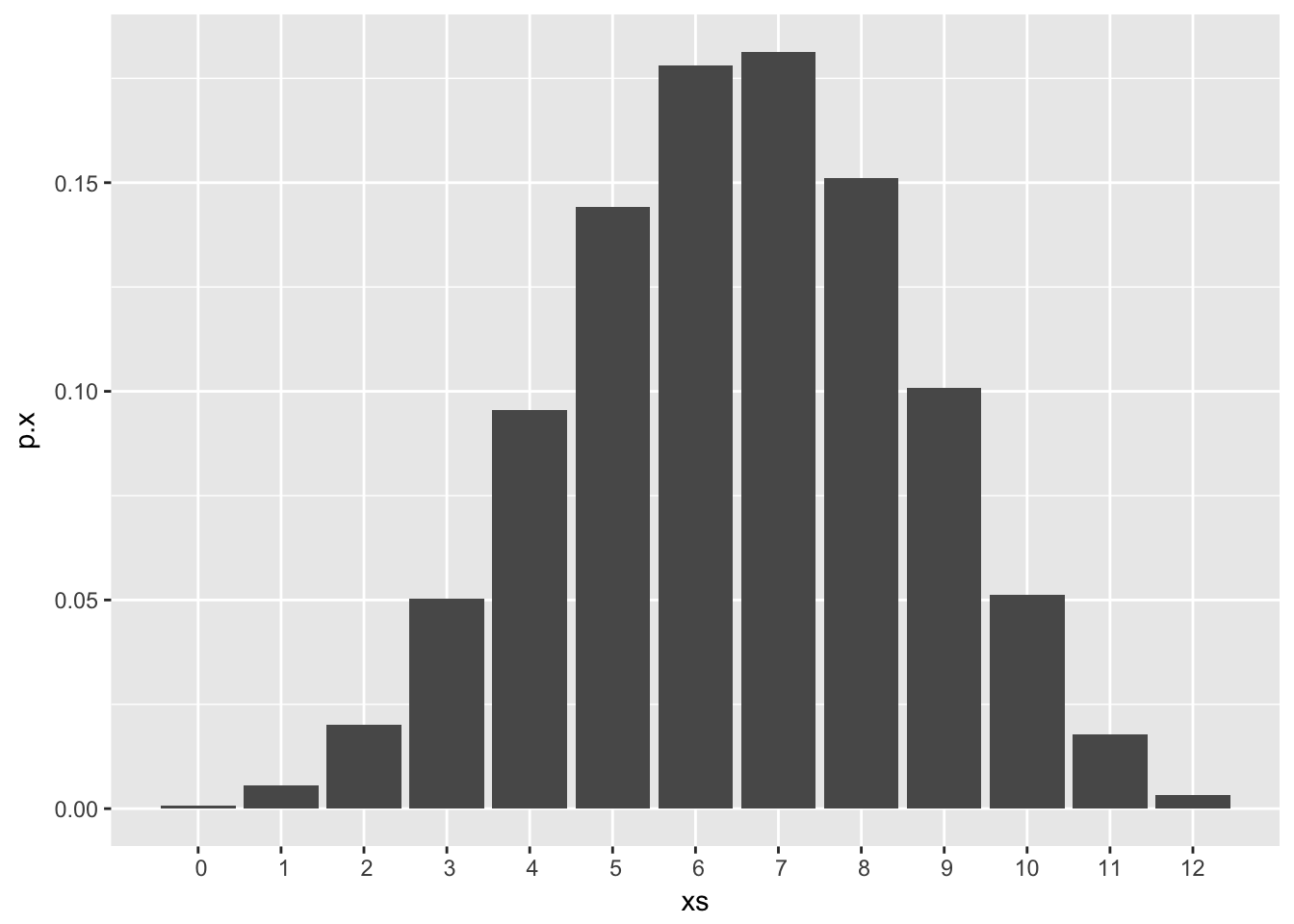
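To put a number on "a bit less optimistic", the posterior can be summarized in base R. The sketch below also shows that the posterior mean is a weighted average of the prior mean and the observed win rate. The variable names are illustrative.

```r
y <- 4; n <- 12          # observed season: 4 wins in 12 games
a <- 9; b <- 3           # prior "wins" and "losses"

a_post <- y + a          # 13
b_post <- n - y + b      # 11

post_mean <- a_post / (a_post + b_post)          # 13 / 24, about 0.54
post_ci   <- qbeta(c(0.025, 0.975), a_post, b_post)

# Posterior mean as a blend of prior mean (0.75) and observed rate (4 / 12).
w     <- (a + b) / (a + b + n)                   # weight on the prior
blend <- w * (a / (a + b)) + (1 - w) * (y / n)
```

Because the prior carries the weight of 12 pseudo-games and the data are 12 real games, the two sources get equal weight here.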

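Finally, let’s take into account all of this information from the past two seasons to get a posterior predictive distribution of a new 12-game season. From earlier, the number of wins in the new season follows a $\text{Beta-Binomial}(12,\, 4 + 9,\, 12 - 4 + 3)$ distribution.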

dbb_plot(n = 12, alpha = 4+9, beta = 12-4+3)
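The posterior predictive probabilities behind this plot can be computed directly in base R. In this sketch, `dbetabinom` is a hypothetical helper (not a betaR function), and the "winning record" threshold of 7 or more wins is an illustrative choice.

```r
# Hypothetical helper: beta-binomial pmf computed on the log scale.
dbetabinom <- function(y, n, a, b) {
  exp(lchoose(n, y) + lbeta(y + a, n - y + b) - lbeta(a, b))
}

n_new  <- 12             # games in the new season
a_post <- 4 + 9          # posterior "wins"
b_post <- 12 - 4 + 3     # posterior "losses"

wins <- 0:n_new
pmf  <- dbetabinom(wins, n_new, a_post, b_post)

# Expected wins; equals n_new * a_post / (a_post + b_post) = 6.5.
expected_wins <- sum(wins * pmf)

# Probability of a winning record (7 or more wins out of 12).
p_winning_record <- sum(pmf[wins >= 7])
```

The expected number of wins drops from 9 under the prior predictive to 6.5 after the 4-8 season is taken into account.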

Shiny App

The reactive app for visualizing the distributions discussed here under different parameter sets can be found at https://carter-allen.shinyapps.io/BetaBinomial/.